So you want to use Object Storage

Tips and lessons learned from building systems directly against object stores

Over the past 19 years (S3 was launched on March 14th 2006, as the first public AWS service), object storage has become the gold standard for storing large amounts of data in the cloud. It's reliable, reasonably cheap, reasonably fast, and requires no special incantations to deploy. Best of all, it offers a straightforward HTTP-based interface with clear semantics (see NFS horrors).

Reading this, you might come up with an intriguing idea—why not run your entire database on object storage? You're in good company: Snowflake, Warpstream, Neon, SlateDB, Pinecone, TurboPuffer, Turso, RocksDB’s tiered storage, Delta Lake, Iceberg, and many others already do that in some form or another.

If you start building a system that reads a lot of data from object storage, you might find yourself spending most of your time just waiting on reads, with costs going up and users complaining about high latency, all while your monitoring tells you you’re getting great latency from your cloud provider. So what’s going on?

In this post I’ll try to highlight some common issues I’ve run into, in the hope of helping you avoid them by designing and building better systems around object storage.

Tail latencies will eat you up

Roughly speaking, the latency of systems like object storage tends to follow a lognormal distribution (please come and teach me on BlueSky, always happy to learn). It mostly behaves nicely and stays within a reasonable range, but it has a very long tail. These are large and complex systems: one request might touch dozens of machines, network switches and disks, and any one of them might have a transient issue that affects overall latency.

The latency of each individual step adds up, and as an almost unavoidable fact of distributed systems, sometimes we’ll get slow operations. It might be a faulty network switch, a garbage collection pause in a process, or just a busy disk. The same is true of our own systems: the more reads we make, the more likely it is that at least one of them will be slow, and that slowness cascades onto our own clients.
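To put a number on that fan-out effect, here is a back-of-the-envelope sketch. It assumes every read is slow independently with the same probability, which real systems only approximate, and `prob_any_slow` is a name made up for this illustration.

```rust
/// Probability that at least one of `n` reads is "slow", assuming each read
/// is slow independently with probability `p_slow` (a simplifying assumption).
fn prob_any_slow(n: u32, p_slow: f64) -> f64 {
    // All n reads are fast with probability (1 - p_slow)^n; take the complement.
    1.0 - (1.0 - p_slow).powi(n as i32)
}
```

Under these assumptions, a request that fans out into 100 reads, each with a 1% chance of landing in the tail, hits at least one slow read about 63% of the time (`prob_any_slow(100, 0.01)`).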

Hedging

On every request we roll the latency dice, and if we’re unlucky, some switch will misroute our data and we’ll have to wait a few hundred milliseconds or more. But with most object storage providers allowing us to send thousands of requests per second, we can just try again!

But when should we try again? If we wait for the (slow) response, we’ve already missed our chance and there’s really no point. I’ve seen a few alternate approaches:

- Immediately send two (or more) requests and take the first response. Yeah, it’ll cost you twice as much, but if the two requests are slow independently of each other, what used to be your annoying p99 latency is now your p99.99. Instead of one slow request out of 100, it’s now one out of 10,000! Already much, much better. We can push this further by sending more requests, depending on our cost sensitivity and the overall load the storage system can handle.

- Wait for the p95 latency before sending another request. We pick a latency threshold (I’ve typically seen the p95 of the target service, either pre-measured or tracked dynamically) and send a second, identical request once the first one exceeds it. Given the latency distribution and the nature of distributed systems, some requests might never return; this significantly reduces the impact of such events while adding only about 5% more requests.

- Try different endpoints. Depending on your setup, you may be able to hit different servers serving the same data. The less infrastructure they share with each other, the more likely it is that their latency won’t correlate. Depending on the server implementation, we can even tell the slower one to stop processing the request once we have a response from the other.
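The second approach can be sketched with nothing but threads and a channel from the standard library. This is a minimal illustration, not a production client: `hedged` and its parameters are hypothetical names, `request` stands in for whatever issues the real GET, and the slower attempt is left to finish in the background and is not cancelled.

```rust
use std::sync::{mpsc, Arc};
use std::thread;
use std::time::Duration;

/// Runs `request` once, and a second time if the first attempt has not
/// answered within `hedge_after`. Returns whichever result arrives first.
fn hedged<T, F>(request: F, hedge_after: Duration) -> T
where
    T: Send + 'static,
    F: Fn() -> T + Send + Sync + 'static,
{
    let request = Arc::new(request);
    let (tx, rx) = mpsc::channel();

    // Each attempt runs on its own thread and reports back over the channel.
    let spawn_attempt = |tx: mpsc::Sender<T>| {
        let request = Arc::clone(&request);
        thread::spawn(move || {
            // The receiver may already be gone if the other attempt won.
            let _ = tx.send(request());
        });
    };

    spawn_attempt(tx.clone());
    match rx.recv_timeout(hedge_after) {
        // The first attempt answered before the threshold: no hedge needed.
        Ok(result) => result,
        // Too slow: send an identical request and take the first answer.
        Err(_) => {
            spawn_attempt(tx);
            rx.recv().expect("at least one attempt should respond")
        }
    }
}
```

Passing `Duration::ZERO` as the threshold makes it behave much like the first approach (always send two). A real client would also want to cancel the losing request and cap how many hedges are in flight.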

These ideas aren’t new. Many papers, blog posts and implementations described them long before I ever wrote my first line of code.

Demo Time

I wrote a short Rust program to demonstrate the idea. Instead of making actual requests, it samples latencies from a lognormal distribution with μ=4.7 and σ=0.5, which puts the median at roughly 110ms.

┌───────────────────────────┬────────────────┬────────┬────────┬────────┬────────┬─────────┐
│ Strategy                  │ Extra requests │ p50    │ p75    │ p95    │ p99    │ max     │
├───────────────────────────┼────────────────┼────────┼────────┼────────┼────────┼─────────┤
│ No hedging                │ 0              │ 110.26 │ 154.18 │ 250.32 │ 354.10 │ 1134.17 │
│ Hedge 2 requests          │ 100000         │ 83.51  │ 109.71 │ 159.82 │ 207.61 │ 446.27  │
│ Wait 250ms before hedging │ 4977           │ 106.69 │ 145.75 │ 211.49 │ 242.44 │ 444.60  │
│ Randomly hedge 5%         │ 4938           │ 108.24 │ 151.83 │ 247.57 │ 344.62 │ 1057.38 │
└───────────────────────────┴────────────────┴────────┴────────┴────────┴────────┴─────────┘

The first strategy gives us a baseline. With no hedging at all, we make 0 additional requests and we can get a sense for the overall distribution.

The second row shows a very aggressive strategy: for every request we make, we send an additional one and return whichever finishes first. We can immediately see a significant improvement, with our maximum latency down by over 50% and even our p50 improved by almost 25%. But the cost here is significant: we effectively double the number of requests.

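The third strategy hedges only the requests that take longer than 250ms, which about 5% of unhedged requests do. Lower percentiles remain largely unaffected since we don’t hedge those requests. However, the far tail (the last two columns of the table) shows dramatic improvements, nearly matching the more aggressive strategy, while increasing the number of requests by just 5%. The fourth strategy sends a similar number of extra requests but picks them at random, and it barely moves the tail: the benefit comes from hedging the requests that are actually slow, not from the extra requests themselves.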
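For readers who want to play with the numbers, here is a rough, std-only sketch of this kind of simulation. It is not the program behind the table. The generator, the `simulate` and `percentile` helpers and the seed are all assumptions made for illustration, and it starts the second request’s clock at the hedge point, so its output will not necessarily match the table.

```rust
const MU: f64 = 4.7; // lognormal parameters quoted in the post
const SIGMA: f64 = 0.5;

/// Tiny xorshift64* generator so the sketch needs no external crates.
/// The seed must be non-zero.
struct Rng(u64);

impl Rng {
    fn next_u64(&mut self) -> u64 {
        self.0 ^= self.0 >> 12;
        self.0 ^= self.0 << 25;
        self.0 ^= self.0 >> 27;
        self.0.wrapping_mul(0x2545_F491_4F6C_DD1D)
    }

    /// Uniform sample in (0, 1]; never exactly 0, so ln() below stays finite.
    fn uniform(&mut self) -> f64 {
        ((self.next_u64() >> 11) as f64 + 0.5) / (1u64 << 53) as f64
    }

    /// Lognormal sample: exp(MU + SIGMA * z), with z drawn via Box-Muller.
    fn lognormal(&mut self) -> f64 {
        let (u1, u2) = (self.uniform(), self.uniform());
        let z = (-2.0 * u1.ln()).sqrt() * (2.0 * std::f64::consts::PI * u2).cos();
        (MU + SIGMA * z).exp()
    }
}

/// Simulates `n` requests, sending a second request whenever the first takes
/// longer than `hedge_after` ms. Returns sorted latencies and the hedge count.
fn simulate(n: usize, hedge_after: f64, seed: u64) -> (Vec<f64>, usize) {
    let mut rng = Rng(seed);
    let mut extra = 0usize;
    let mut latencies: Vec<f64> = (0..n)
        .map(|_| {
            let first = rng.lognormal();
            if first <= hedge_after {
                return first;
            }
            extra += 1;
            // The hedge only starts once we have already waited `hedge_after`.
            first.min(hedge_after + rng.lognormal())
        })
        .collect();
    latencies.sort_by(|a, b| a.partial_cmp(b).unwrap());
    (latencies, extra)
}

/// Nearest-rank style percentile of an already sorted, non-empty slice; `p` in [0, 1].
fn percentile(sorted: &[f64], p: f64) -> f64 {
    sorted[((sorted.len() - 1) as f64 * p).round() as usize]
}
```

Calling `simulate(100_000, f64::INFINITY, 42)` gives the no-hedging baseline, `simulate(100_000, 0.0, 42)` always sends two requests, and `simulate(100_000, 250.0, 42)` hedges after 250ms. Feeding the sorted latencies to `percentile` makes it easy to compare thresholds before trying them against a real object store.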

Caching

As we’ve already established, object storage latency can vary widely, and costs can add up really quickly. Most systems tend to have a subset of data that is accessed frequently, often much smaller than the overall data stored in the system. If we store that subset in a cache, we can improve latencies — but at what cost?

Let’s assume a very simple cache setup where we execute medium sized reads of ~8MB (Parquet pages, Lucene indexes, etc.) from a much larger dataset, and want to hold a cache of ~100GB.

I’ll use AWS pricing here because I’m most familiar with their offering, but I suggest you repeat this exercise with your cloud provider of choice. The conclusions might be different!

100GB of EBS gp3 storage costs $8/month and provides 125MB/s of throughput, which should be more than enough for us. We can discount our at-rest S3 cost from our caching costs since that’s our system of record, but reads will cost us $0.0000004 per request.

That comes out to about $0.011 of EBS cost an hour. At $0.0000004 per request, that buys roughly 27,400 S3 reads an hour, so assuming data is always in cache, we need fewer than 8 requests a second for the EBS cache to be cheaper! There are many other possible configurations, and depending on your workload you might have different priorities, but I’m sure that going through this exercise with your workload in mind will be useful.
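The break-even arithmetic is easy to redo for other prices. The sketch below uses only the two figures quoted above and assumes every read is served from the cache, ignoring instance, data transfer and cache-fill costs. `break_even_rps` is a name invented for this example.

```rust
/// Requests per second above which a fixed monthly cache cost is cheaper
/// than paying the per-request price on every read.
/// Assumes a 100% cache hit rate and ignores every other cost.
fn break_even_rps(cache_usd_per_month: f64, usd_per_request: f64) -> f64 {
    const HOURS_PER_MONTH: f64 = 730.0; // 365 days * 24 h / 12 months
    let cache_usd_per_second = cache_usd_per_month / HOURS_PER_MONTH / 3600.0;
    cache_usd_per_second / usd_per_request
}
```

With the numbers from this section, `break_even_rps(8.0, 0.0000004)` comes out at roughly 7.6 requests per second. A lower hit rate pushes the break-even point up, so it is worth rerunning with your own measurements.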

Another significant benefit of caching is the ability to specialize your hardware. You can run your CPU-intensive application on nodes with the fastest CPU you can find, and let dedicated caching nodes with large disks and a lot of RAM serve as a layer in front of your storage.

I highly recommend this paper, which takes a much deeper dive into the different options and considerations, and includes references to the choices different real-world systems made.

Horizontal Scaling

Rather than using a single connection to read an object, split the read across multiple connections. Every object storage provider offers a range read API, so if you know the object size, you can read different parts simultaneously by making multiple requests for different non-overlapping ranges. AWS even encourages you to do this, providing specific recommendations for read sizes. If you’re running on servers with high network throughput capacity, this parallel reading approach is essential for maximizing your available bandwidth.
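As a sketch of what that looks like in code, the helpers below split an object into inclusive byte ranges, format them as HTTP `Range` header values, and fetch the ranges concurrently. All the names here are hypothetical, and `fetch` is a stand-in for a real ranged GET issued through whatever client you use.

```rust
use std::thread;

/// Splits an object of `size` bytes into inclusive (start, end) byte ranges
/// of at most `chunk` bytes each.
fn byte_ranges(size: u64, chunk: u64) -> Vec<(u64, u64)> {
    assert!(chunk > 0, "chunk size must be positive");
    let mut ranges = Vec::new();
    let mut start = 0;
    while start < size {
        let end = (start + chunk).min(size) - 1;
        ranges.push((start, end));
        start = end + 1;
    }
    ranges
}

/// Formats a range as the value of an HTTP `Range` header (ends are inclusive).
fn range_header(range: (u64, u64)) -> String {
    format!("bytes={}-{}", range.0, range.1)
}

/// Fetches all ranges concurrently, one thread per range, and reassembles
/// the parts in order. `fetch(start, end)` stands in for a real ranged GET.
fn parallel_read<F>(size: u64, chunk: u64, fetch: F) -> Vec<u8>
where
    F: Fn(u64, u64) -> Vec<u8> + Sync,
{
    let ranges = byte_ranges(size, chunk);
    let fetch = &fetch; // share one reference across all worker threads
    let parts: Vec<Vec<u8>> = thread::scope(|s| {
        // Spawn every request first, then join them in range order.
        let handles: Vec<_> = ranges
            .iter()
            .map(|&(start, end)| s.spawn(move || fetch(start, end)))
            .collect();
        handles
            .into_iter()
            .map(|h| h.join().expect("fetch panicked"))
            .collect()
    });
    parts.concat()
}
```

One thread per range keeps the example short. For large objects you would cap the number of in-flight requests (or use an async client) and pick the chunk size according to your provider’s guidance.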

This also holds if you decide to use a cache, especially if it’s a distributed one. If you have to read a lot of data quickly, reading it over the network from multiple cache nodes at the same time can provide huge throughput improvements.

Putting it all together

There’s no silver bullet for every workload, and many factors go into the design options we discussed today. Modeling and measuring system behavior is a must: it helps us validate our mental model of the system and, maybe even more powerfully, lets us make educated guesses about how it will behave under certain changes. The tool I knocked up here makes many assumptions about how object storage will act. Your specific system and configuration might behave differently, so you should verify your assumptions in the real world.

Taking advantage of the cloud's unique features can provide significant benefits. Cloud infrastructure lets us fine-tune hardware decisions with incredible precision, ensuring each service runs on hardware that best matches its specific needs and characteristics.

I think the most important lesson I learned building on top of Object Storage over the past few years is that it’s not just another disk. It’s a (relatively) new primitive that behaves quite differently, and it puts a lot of control right at our fingertips instead of hiding it behind obscure kernel configurations.

Recommended posts

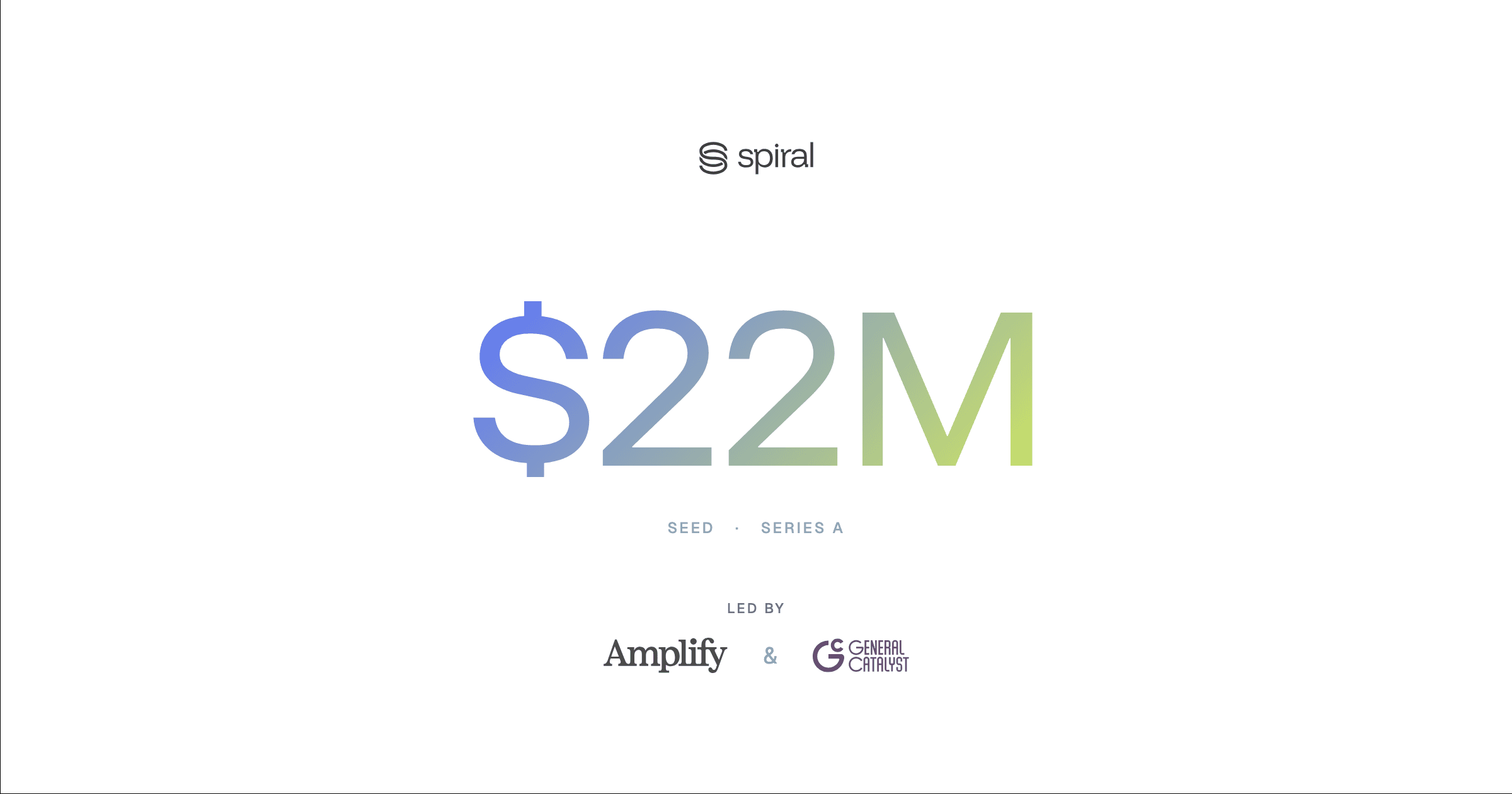

Announcing Spiral

Data 3.0, with backing from the best

Vortex: a Linux Foundation Project

Building the Future of Open Source, Columnar Storage

Vortex on Ice

Using Vortex to accelerate Apache Iceberg queries up to 4x